Duplicate content refers to substantively similar or identical copies of content that appear on the web, either at multiple URLs on a single domain or across different domains. This is exactly why duplicate content is an issue for SEO: it confuses even Google’s sophisticated algorithms, which are designed to favor fresh, high-quality content. Search engines have trouble deciding which of the variations to crawl, index, and ultimately rank.

Suppose a busy online store mistakenly publishes the same product page at example.com/product/red-shoe and example.com/red-shoe?sessionid=123. This small URL variation creates duplicate content, because search engines treat each distinct URL as a separate page.
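As a rough illustration of how a session parameter multiplies URLs, here is a minimal Python sketch that strips parameters which do not change page content. The `IGNORED_PARAMS` list and the `normalize_url` helper are assumptions made for this example, not part of any SEO tool. It only handles the query-string half of the problem; the two different paths in the example above would still need a canonical tag or a redirect.

```python
from urllib.parse import urlsplit, urlunsplit, parse_qsl, urlencode

# Hypothetical list of parameters that do not change what the page shows.
IGNORED_PARAMS = {"sessionid", "utm_source", "utm_medium", "utm_campaign"}


def normalize_url(url: str) -> str:
    """Drop ignored query parameters and the fragment, lowercase the host."""
    parts = urlsplit(url)
    kept = [
        (key, value)
        for key, value in parse_qsl(parts.query, keep_blank_values=True)
        if key.lower() not in IGNORED_PARAMS
    ]
    return urlunsplit(
        (parts.scheme, parts.netloc.lower(), parts.path, urlencode(kept), "")
    )


print(normalize_url("https://example.com/red-shoe?sessionid=123"))
# prints https://example.com/red-shoe
```

Running every crawled URL through a function like this and counting how many collapse to the same result gives a quick estimate of parameter-driven duplication.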

In Google Search Console, this issue frequently appears with the status “Duplicate without user-selected canonical”, meaning no canonical URL has been declared for the page. The ranking potential of both versions is split because Google struggles to tell the master page apart from its near-identical copies.

This post explains how to identify duplicate content, why it hurts your rankings and ends up indexed under the wrong URL, how Google’s recent updates detect it, and how to fix and prevent it with techniques like the canonical tag.

What Is Duplicate Content (and What It’s Not)?

Google Search Central describes duplicate content as substantive blocks of content, within the same site or across domains, that either completely match other content or are appreciably similar to it. Notably, the definition depends on how search engines compare content, not on how a human reader perceives it. Understanding this nuance is necessary for an effective SEO strategy.

How Identical Copies Differ from Near Duplicates

Exact copies are identical word for word. Near-duplicates are a subtler problem: the content stays semantically the same even after being altered by automated rewriting or basic text spinning. Google’s semantic matching, reportedly redesigned around 2025, looks for these finer variations and the underlying copying.
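To make the difference between exact and near duplicates concrete, the sketch below scores two texts by the overlap of their three-word sequences (shingles) using Jaccard similarity. This is a simple lexical measure chosen for illustration; it is not Google’s semantic matching, and the function names and shingle size are assumptions.

```python
import re


def shingles(text: str, size: int = 3) -> set:
    """Return the set of overlapping word sequences of the given size."""
    words = re.findall(r"\w+", text.lower())
    if len(words) < size:
        return {tuple(words)} if words else set()
    return {tuple(words[i:i + size]) for i in range(len(words) - size + 1)}


def jaccard(a: str, b: str) -> float:
    """Shared shingles divided by all shingles: 1.0 means identical."""
    set_a, set_b = shingles(a), shingles(b)
    if not set_a and not set_b:
        return 1.0
    return len(set_a & set_b) / len(set_a | set_b)


original = "the red shoe is very comfortable to wear"
spun = "the red shoe is very comfortable to own"
print(round(jaccard(original, original), 2))  # 1.0, an exact copy
print(round(jaccard(original, spun), 2))      # 0.71, a near duplicate
```

A score of 1.0 marks an exact copy, while a high score below 1.0 suggests a near duplicate worth reviewing by hand. Where to draw the threshold is a judgment call for each site.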

Content Reused on Multiple URLs

Duplicate content typically occurs when the same product description or blog entry is displayed at several different URLs within the same site. Reusing the same “About Us” copy across 10 different service pages is an example. It’s easy to write, but it causes serious duplication issues and makes Google think there isn’t much original value. You need strong SaaS marketing strategies that differentiate your content rather than copy it.

Harmless vs Harmful Duplicates (print editions, filters, etc.)

Not all duplication is bad. A printer-friendly version or a language translation can count as a duplicate. These are mostly harmless if they are marked up correctly, but they can still confuse search engines if they are not. Harmful duplicates are those that consume crawl budget and lead to indexation issues, such as those created by faceted navigation (filters) or tracking parameters, which generate many low-value, non-canonical URLs.

Why Duplicate Content Is a Problem for SEO

Content duplication not only causes theoretical confusion but also damages your website’s ranking and credibility in search engines. The actual cost of duplicate content issues reveals itself at this point.

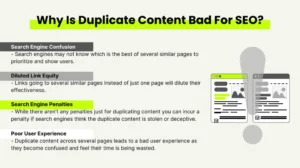

Search Engine Confusion and Ranking Dilution

Duplicate content causes many pages to battle it out against one another for the same keyword rank.

This competition splits link equity (the authority passed through backlinks) and topical authority between the competing pages. Instead of one strong page ranking, you end up with several weak pages, an effect known as ranking dilution. It is the opposite of building strong content clusters, which are meant to clearly establish the site’s authority on a given topic. Avoid tactics that mass-produce exact or near-duplicate pages, as commonly seen in link farming, which builds links artificially.

Crawl Budget and Indexation Waste

Each site also has a crawl budget, i.e., the number of pages Googlebot will visit within a given time frame. Duplicates spend this budget unnecessarily. When crawlers sift through thousands of copies of reused content, they are likely to miss your latest, most valuable content. This translates directly into indexation issues, because Google can’t easily determine which of the competing URLs is the “best” one to show in the SERPs.

Intent Mismatch & Bad User Experience

During a single search, a user may come across two or three identical-looking pages from the same site. Because of this frustrating experience, people lose trust in the website’s authority.

Furthermore, when identical pages target the same user intent, the search result may surface a page that is not exactly what the user asked for. This can hurt engagement metrics, including click-through rate (CTR) and bounce rate.

Identifying Duplicate Content on Google (2025 Algorithm Insights)

Google now goes beyond basic word-for-word matching and can detect duplicate material far more efficiently than it could previously. Your own detection methods need to be updated to keep pace.

AI Detection and Semantic Matching

Google’s capacity to detect duplicates improved greatly with its 2025 algorithm updates. While Google formerly examined only keyword frequency or word-for-word matches, it now relies extensively on Natural Language Processing (NLP) and semantic similarity.

This means it may treat modified or copied text as semantically equivalent to the original even when the two are not exact duplicates. This expanded detection highlights the pivotal role of AI in SEO, as it is now designed to identify content that offers no new value:

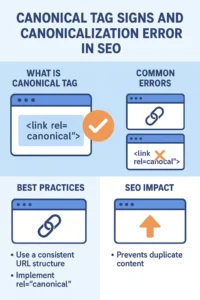

Canonical Tag Signals and Canonicalization Errors

The canonical tag is the primary signal Google uses for duplicate consolidation.

This tag, a link element placed in the HTML head of a page, tells Google which URL version you prefer. However, Google does not always follow the suggestion; it treats the canonical tag as a hint. When signals are missing or conflict, GSC reports statuses such as “Duplicate without user-selected canonical” (no canonical was declared) or “Duplicate, Google chose different canonical than user” (Google overrode your tag).
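One way to spot missing or conflicting canonical hints before Google does is to parse a page’s HTML and list every canonical it declares. The sketch below uses Python’s standard `html.parser`. The `CanonicalFinder` class and `find_canonicals` helper are names invented for this example, and a real audit would also need to fetch pages and check HTTP headers and sitemaps.

```python
from html.parser import HTMLParser


class CanonicalFinder(HTMLParser):
    """Collect the href of every <link rel="canonical"> in a document."""

    def __init__(self):
        super().__init__()
        self.canonicals = []

    def handle_starttag(self, tag, attrs):
        if tag != "link":
            return
        attr = dict(attrs)
        rel_values = (attr.get("rel") or "").lower().split()
        if "canonical" in rel_values and attr.get("href"):
            self.canonicals.append(attr["href"])


def find_canonicals(html: str) -> list:
    finder = CanonicalFinder()
    finder.feed(html)
    return finder.canonicals


page = """<html><head>
<link rel="canonical" href="https://example.com/product/red-shoe">
<link rel="canonical" href="https://example.com/red-shoe">
</head><body></body></html>"""

found = find_canonicals(page)
if not found:
    print("No canonical declared")
elif len(set(found)) > 1:
    print("Conflicting canonicals:", found)
```

An empty list corresponds to a page with no user-selected canonical, while more than one distinct value is a conflicting signal that should be cleaned up.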

AI-Generated or Scraped Content Detection

Google has advanced processes for detecting AI-generated or scraped content, including mass content creation and content scraping. Whether the material is automatically generated by a poor AI model or blatantly copied from a different site, Google looks for the telltale signs of non-original or low-quality content. Identifying content that has been scraped from reliable sources is crucial for maintaining the quality of search results and protecting the original authors.

How to Identify Content Duplication on Your Website

Finding duplicate material on your domain and the internet at large is the first step in solving duplicate content problems. Tools are the fastest way to find out.

Begin by reviewing the Page indexing report (formerly the Coverage report) in Google Search Console. Look under the reasons pages are not indexed for statuses such as ‘Page with redirect’ and ‘Duplicate without user-selected canonical’. This is your most direct view of the issues Google itself has identified.

Another professional method is using site crawlers. You can use software like Screaming Frog to crawl thousands of URLs and then filter them based on the ‘Hash’ or ‘Content’ column to instantly identify exact duplicates or very similar pages regarding structure and text.
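The idea behind a hash column can be sketched in a few lines of Python: pages whose normalized text produces the same hash are exact duplicates. This is an illustration of the general technique, not a description of how Screaming Frog computes its hashes. The `pages` dictionary stands in for a hypothetical crawl export.

```python
import hashlib
import re
from collections import defaultdict


def content_hash(text: str) -> str:
    """Hash the text after collapsing whitespace and lowercasing."""
    normalized = re.sub(r"\s+", " ", text).strip().lower()
    return hashlib.sha256(normalized.encode("utf-8")).hexdigest()


def group_exact_duplicates(pages: dict) -> list:
    """Return lists of URLs that share identical normalized content."""
    groups = defaultdict(list)
    for url, text in pages.items():
        groups[content_hash(text)].append(url)
    return [sorted(urls) for urls in groups.values() if len(urls) > 1]


# Hypothetical crawl export: URL -> extracted body text.
pages = {
    "https://example.com/product/red-shoe": "Red shoe.  Comfortable and light.",
    "https://example.com/red-shoe?sessionid=123": "Red shoe. Comfortable and light.",
    "https://example.com/blue-shoe": "Blue shoe. Sturdy and warm.",
}
print(group_exact_duplicates(pages))
```

Hashing only catches exact matches after normalization. Near duplicates need a similarity measure instead.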

Tools like SE Ranking Duplicate Checker or Copyscape are convenient for finding duplicate or highly plagiarized content on your own site and throughout the web (cross-domain content scraping). These utilities help confirm if someone has plagiarized your content.

Siteliner is a simple yet useful tool that quickly computes the internal duplication percentage on your site, catching issues before they become serious indexation problems.

How to Fix Duplicate Content (Action Plan)

After identifying duplicate content, you need a concrete, step-by-step plan to address the problems at their source.

Implement Canonical Tags

The most common fix is the canonical tag. It consolidates the link equity and authority of duplicate pages into a single master URL and tells search engines which of the URLs is the master version. Best practice is to include a self-referential canonical tag on the master page and point all duplicate variations to the master URL.
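As a minimal sketch of that best practice, the Python below builds the same canonical link element for the master page and each of its variants. The `canonical_link` and `canonical_map` helpers are hypothetical names for this example. In practice, a CMS or SEO plugin usually emits the tag for you.

```python
from html import escape


def canonical_link(master_url: str) -> str:
    """Build the canonical link element that belongs in the page head."""
    return f'<link rel="canonical" href="{escape(master_url, quote=True)}">'


def canonical_map(master_url: str, variants: list) -> dict:
    """Map the master page and every variant to the same canonical tag."""
    tag = canonical_link(master_url)
    return {url: tag for url in [master_url, *variants]}


tags = canonical_map(
    "https://example.com/product/red-shoe",
    ["https://example.com/red-shoe?sessionid=123"],
)
for url, tag in tags.items():
    print(url, "->", tag)
```

The master page receives a self-referential tag, and every variant points to the same master URL, so the signals stay consistent.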

Merge or Consolidate Duplicate Pages

For near-duplicate or heavily overlapping pages (i.e., too much copied content), the best solution is content consolidation. This involves merging the quality content from the poor-performing pages into one authoritative, robust resource with a clearly defined purpose. Once merged, delete the old pages and use redirects to funnel their traffic and link equity to the new, single URL.

Redirect or Noindex Duplicate Pages

Use a permanent 301 redirect when you have consolidated content and the original page should no longer exist.

A 301 passes link equity from the old URL to the new one and sends visitors to the right page.

For low-value pages, such as deep pagination pages, internal search results, or filter pages that you can’t remove but don’t want in the index, apply a “noindex” directive.

This keeps the page out of the index while still letting crawlers follow the links on it. Note that Googlebot must still be able to crawl the page to see the directive, so don’t also block it in robots.txt.
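The two options can be sketched as a tiny WSGI application in Python. The paths in `REDIRECTS` and `NOINDEX_PREFIXES` are hypothetical. The sketch sends noindex through the `X-Robots-Tag` HTTP header instead of a meta tag in the page, a substitution made here only to keep the example short.

```python
# Hypothetical mapping of retired URLs to their consolidated replacements.
REDIRECTS = {"/old-red-shoe-guide": "/red-shoe-guide"}

# Hypothetical path prefixes for low-value pages kept out of the index.
NOINDEX_PREFIXES = ("/search", "/filter")


def app(environ, start_response):
    """Answer with a 301 for retired pages and noindex for low-value ones."""
    path = environ.get("PATH_INFO", "/")

    if path in REDIRECTS:
        start_response("301 Moved Permanently", [("Location", REDIRECTS[path])])
        return [b""]

    headers = [("Content-Type", "text/html; charset=utf-8")]
    if path.startswith(NOINDEX_PREFIXES):
        # Header equivalent of a robots meta tag with content="noindex".
        headers.append(("X-Robots-Tag", "noindex"))
    start_response("200 OK", headers)
    return [b"<html><body>page</body></html>"]
```

You could serve it locally with `wsgiref.simple_server.make_server("", 8000, app)` from the standard library to inspect the responses. Most production sites would configure the same rules in the web server or CMS instead.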

Handle Scraped or Cross-Domain Duplicates

Suppose you find that your content is being heavily reposted on another domain through content scraping. The first step is to ask the republisher to add a cross-domain canonical tag pointing back to your original URL. If that fails and the scraping is egregious, you may need to send a DMCA takedown notice to the host or domain registrar of the offending domain.

How to Prevent Duplicate Content in the Future

You can prevent future duplicate content problems by emphasizing a consistent, logical site structure.

Maintain a Clean URL and Internal Link Structure

Structure is the first step in prevention. Aim for URLs that are clear, concise, and descriptive of each page’s purpose.

Ensure your internal links always point to the canonical form of a URL to avoid sending confusing signals.

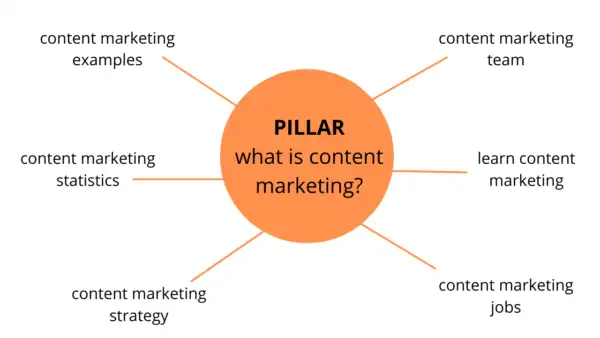

Form Topical Content Clusters

Topic content clustering means that every page on your site serves a distinct purpose.

A clear structure like this reduces unwanted content duplication and indexation issues by significantly lowering the chance of two closely matching pages inadvertently competing for the same keywords.

Perform Regular Content Audits Using AI Tools

For large sites, the detection process must be automated. Set up regular automated audits with AI SEO automation tools to actively find duplicate content and to flag possible “Duplicate without user-selected canonical” issues before they impact your rankings.

Case Study: How Fixing Duplicate Content Boosted Rankings

A medium-sized B2B company in the financial technology sector experienced severe indexation issues due to thousands of automatically generated URLs on filtered product pages. Over 40% of its site was flagged as duplicate content (mainly recycled content).

The action plan included implementing a canonical tag strategy across the site, aggressively noindexing all of the filter pages, and consolidating multiple near-duplicate blog posts (content consolidation). The intention was to remove all the exact duplicates that were consuming crawl budget.

Over a period of three months, the number of indexed exact duplicates dropped by over 70%. On the company’s most important commercial keywords, the immediate effect was a 35% rise in organic traffic and an average rank gain of 10 spots, suggesting that authority and crawl budget can be recovered by eliminating exact copies and close duplicates.

Final Thoughts

Fixing duplicate content issues and learning how to avoid them is a natural part of building a sound, future-proof SEO foundation. Prioritize two fundamental principles: ensuring every page serves a single user intent and following correct canonicalization.

By performing regular content audits, maintaining a clear internal linking hierarchy, and keeping your canonical tag signals in order, you keep your site’s link equity intact. Don’t let reused content or duplicate copies dilute your strength.

Audit for duplicate content today and safeguard your rankings.

FAQs

Q1: Does Google directly penalize duplicate content?

Google doesn’t give a direct “penalty” but instead filters or disregards the duplicate pages, causing ranking dilution and wasted crawl budget.

Q2: How do I correct duplicate URLs in WordPress?

Use an SEO plugin like Yoast or Rank Math to add the proper self-referencing canonical tag to your primary pages, and consider noindexing or redirecting low-value archive pages.

Q3: Is translated content considered duplicate content?

No, translated content is not typically treated as duplicate when you add the proper hreflang tags to let Google know the language and region targeting of each URL.

Q4: How’s a canonical different from a redirect?

A canonical tag tells Google a preferred URL without removing the duplicate page from the live site; a redirect (301) removes the duplicate page from the live site and sends visitors to the new page.

Q5: How do I find “Duplicate without user-selected canonical” pages?

Check the Page indexing report (formerly the Coverage report) in Google Search Console, which lists the URLs that Google has marked with the “Duplicate without user-selected canonical” status.